A recurrent network that segments an unlabeled, externally timed sequence of data is presented. The proposed method uses a previously investigated Bayesian learning scheme in which the relaxation scheme is modified with two extra parameters: a pairwise correlation threshold and a pairwise conditional probability threshold. The method is able to find the start and end positions of words in an unlabeled continuous stream of characters. Its robustness against noise during both learning and recall is also studied.
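The role of the two thresholds can be illustrated without the recurrent network itself. The sketch below is a hypothetical, much simplified stand-in and not the paper's relaxation scheme. It estimates unigram and adjacent-pair probabilities from the stream, then computes, for each adjacent character pair (a, b), the log correlation ratio log(P(a,b)/(P(a)P(b))) (a log-ratio weight of the kind used in Bayesian confidence propagation networks) and the conditional probability P(b|a). A word boundary is placed wherever either value falls below its threshold. The function names `pair_statistics` and `segment`, the restriction to adjacent pairs, and the default threshold values are all assumptions chosen for illustration.

```python
from collections import Counter
from math import log


def pair_statistics(stream):
    """Estimate unigram P(a) and adjacent-pair P(a, b) from a character stream."""
    unigrams = Counter(stream)
    pairs = Counter(zip(stream, stream[1:]))
    n_uni = len(stream)
    n_pair = len(stream) - 1
    p = {c: k / n_uni for c, k in unigrams.items()}
    pij = {ab: k / n_pair for ab, k in pairs.items()}
    return p, pij


def segment(stream, corr_threshold=0.0, cond_threshold=0.75):
    """Split an unlabeled stream into chunks (hypothetical thresholding heuristic).

    A boundary is inserted between a and b when the log correlation ratio
    log(P(a,b) / (P(a) P(b))) is below corr_threshold, or when the estimated
    conditional probability P(b | a) is below cond_threshold.
    """
    if not stream:
        return []
    p, pij = pair_statistics(stream)
    chunks, current = [], stream[0]
    for a, b in zip(stream, stream[1:]):
        joint = pij[(a, b)]  # every adjacent pair in the stream was counted
        corr = log(joint / (p[a] * p[b]))
        cond = joint / p[a]  # approximate P(b | a)
        if corr < corr_threshold or cond < cond_threshold:
            chunks.append(current)  # low correlation or low conditional probability
            current = b
        else:
            current += b
    chunks.append(current)
    return chunks
```

For example, concatenating the made-up words `abc`, `def` and `ghi` without separators, so that each word is followed by each of the other two about equally often, gives within-word conditional probabilities near 1 and between-word values near 0.5. The default threshold of 0.75 then recovers the words:

```python
words = ["abc", "def", "ghi"]
order = [0, 1, 2, 0, 2, 1] * 10
stream = "".join(words[i] for i in order)
print(segment(stream)[:6])  # ['abc', 'def', 'ghi', 'abc', 'ghi', 'def']
```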

The segmentation problem is fundamental in pattern recognition.

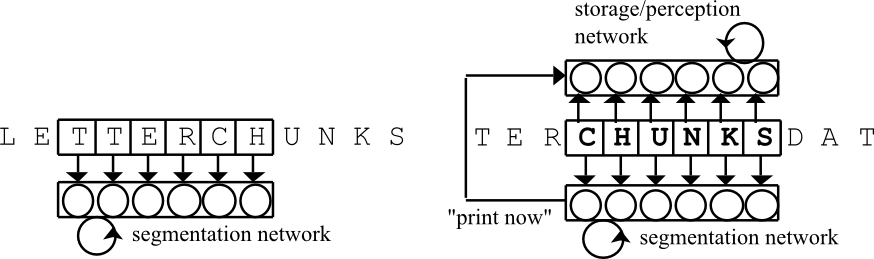

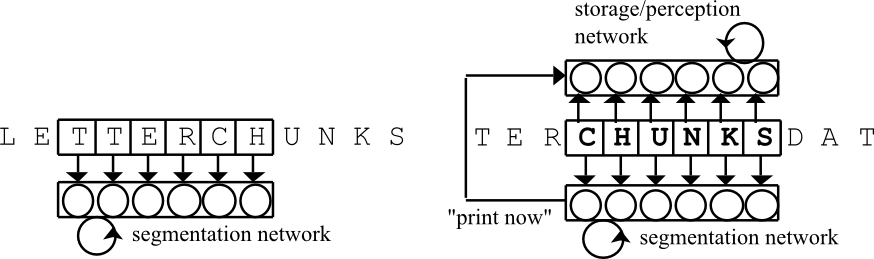

Given data with a sequential/temporal behaviour, this shows up as the *temporal chunking problem*, which may be illustrated with the following sequence: